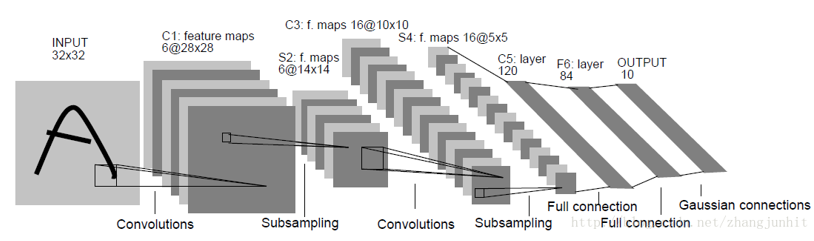

The LeNet-5 network architecture is shown below:

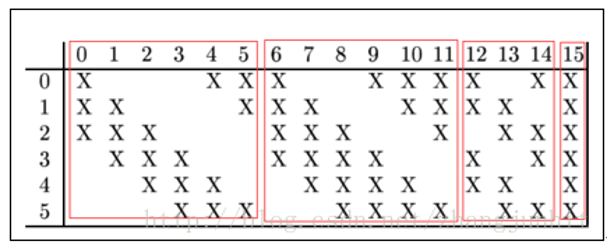

The connection table between the first pooling layer (S2) and the second convolutional layer (C3) is as follows:

Take a look at the Tiny-DNN code below; its structure expresses the connection-table logic very clearly:

```cpp
#include "tiny_dnn/tiny_dnn.h"

static void construct_net(tiny_dnn::network<tiny_dnn::sequential> &nn,
                          tiny_dnn::core::backend_t backend_type) {
// connection table [Y.Lecun, 1998 Table.1]
// rows: the 6 S2 feature maps, columns: the 16 C3 feature maps (O = connected)
#define O true
#define X false
  // clang-format off
  static const bool tbl[] = {
    O, X, X, X, O, O, O, X, X, O, O, O, O, X, O, O,
    O, O, X, X, X, O, O, O, X, X, O, O, O, O, X, O,
    O, O, O, X, X, X, O, O, O, X, X, O, X, O, O, O,
    X, O, O, O, X, X, O, O, O, O, X, X, O, X, O, O,
    X, X, O, O, O, X, X, O, O, O, O, X, O, O, X, O,
    X, X, X, O, O, O, X, X, O, O, O, O, X, O, O, O
  };
  // clang-format on
#undef O
#undef X

  // construct nets
  //
  // C : convolution
  // S : sub-sampling
  // F : fully connected
  // clang-format off
  using fc       = tiny_dnn::layers::fc;
  using conv     = tiny_dnn::layers::conv;
  using ave_pool = tiny_dnn::layers::ave_pool;
  using tanh     = tiny_dnn::activation::tanh;

  using tiny_dnn::core::connection_table;
  using padding = tiny_dnn::padding;

  nn << conv(32, 32, 5, 1, 6,   // C1, 1@32x32-in, 6@28x28-out
             padding::valid, true, 1, 1, 1, 1, backend_type)
     << tanh()
     << ave_pool(28, 28, 6, 2)   // S2, 6@28x28-in, 6@14x14-out
     << tanh()
     << conv(14, 14, 5, 6, 16,   // C3, 6@14x14-in, 16@10x10-out
             connection_table(tbl, 6, 16),
             padding::valid, true, 1, 1, 1, 1, backend_type)
     << tanh()
     << ave_pool(10, 10, 16, 2)  // S4, 16@10x10-in, 16@5x5-out
     << tanh()
     << conv(5, 5, 5, 16, 120,   // C5, 16@5x5-in, 120@1x1-out
             padding::valid, true, 1, 1, 1, 1, backend_type)
     << tanh()
     << fc(120, 10, true, backend_type)  // F6, 120-in, 10-out
     << tanh();
}
```

However, the Python implementations I have found on GitHub and elsewhere almost never express this connection-table logic, which confuses me. Is it because the connections between S2 and C3 don't actually need to follow the table?